Hey guys, back with my weekly blog update on my real-time car commercial project!

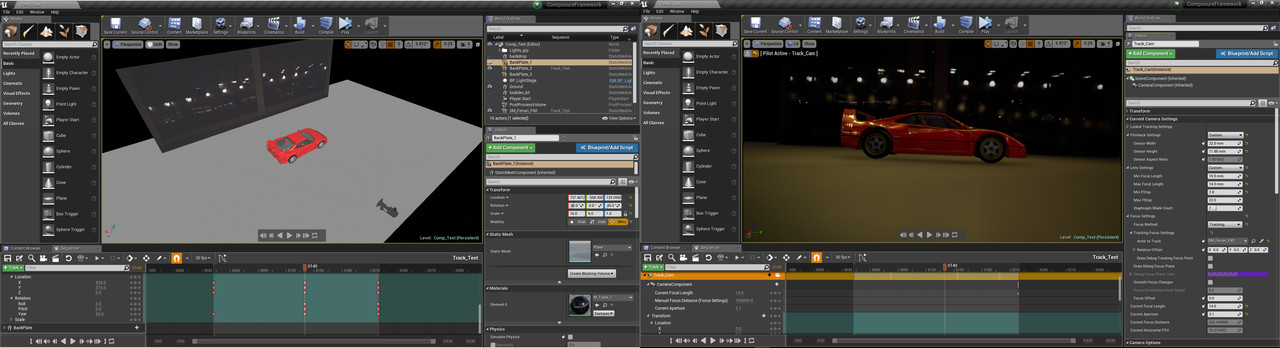

Here's how it looks in Unreal Engine 4, rendered in real time with an updated studio light rig. I've also updated the car paint shader with a dark tone layer that works as an additional fake occlusion pass. The additional roughness map helps me fake the wavy reflections of Ferrari car paint. I've also updated the post process volume so it gives higher resolution in the AO, shadows and bounce light reflections.

I've also rendered an image-based lighting turntable setup using the HDRI we captured from River St. My biggest issue with the HDRI is the shadow quality it gives in real time. Based on this result, the HDRI will most likely only be used for reflection capture on the car, and I will have to use Unreal's light system to get a proper shadow pass.

In addition to the refined shaders and textures, I've also started playing with compositing in Unreal. I tried to recreate the image plane projection setup that I usually use in Maya, using a plane and the cine camera in Sequencer. This setup works as a compositing test, but I'll need to update it after getting the final live action back plate.

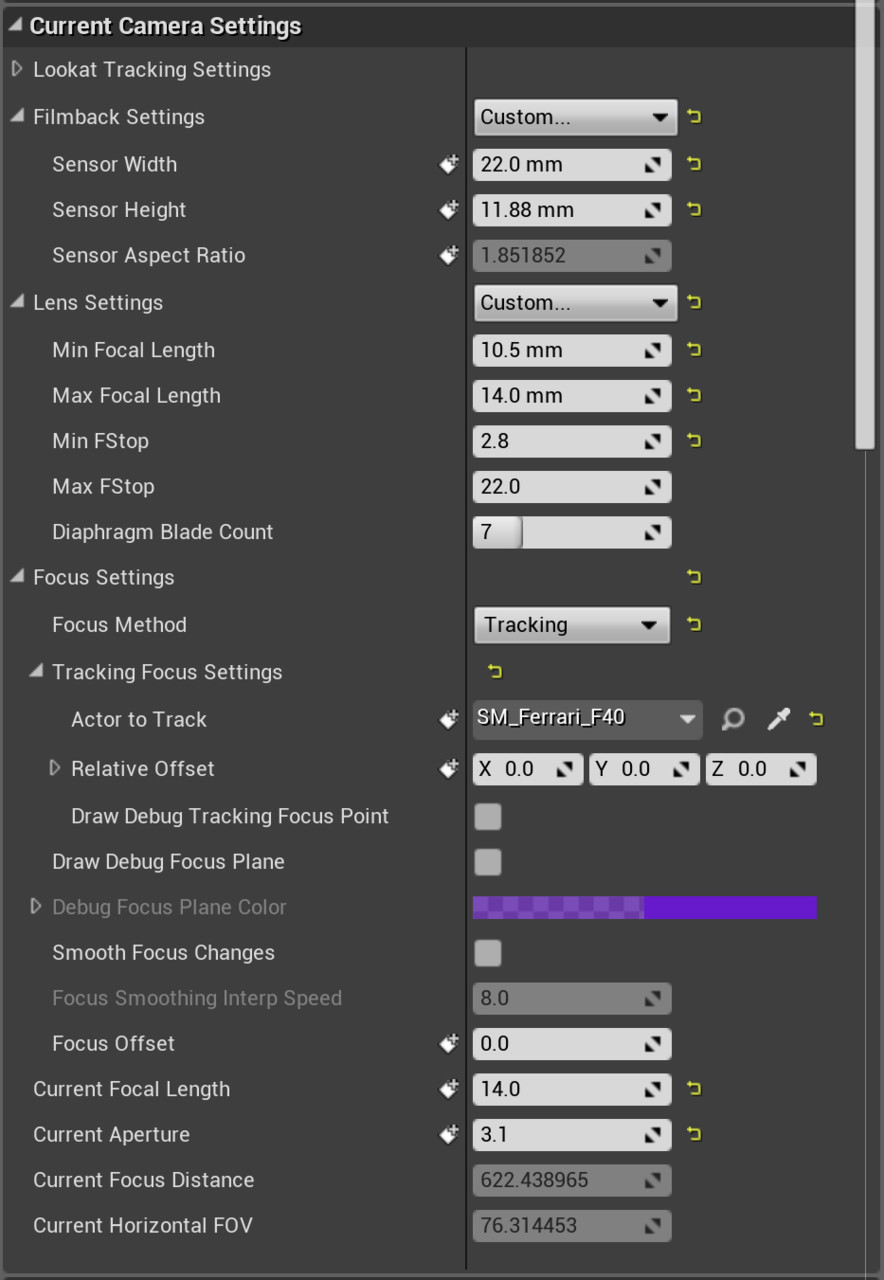
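For anyone trying the same image plane trick, here's a rough sketch of the math I'd use to size the plane so it exactly fills the camera frame at a given distance. It assumes a simple pinhole camera model, and the sensor size, focal length and distance below are placeholder values (not my actual camera data). The function name is just something I made up for this example.

```python
def image_plane_size(sensor_width_mm, sensor_height_mm, focal_length_mm, distance):
    """Return (width, height) of a plane that fills the camera frame.

    Pinhole model: the visible width at a given distance is
    distance * sensor_width / focal_length. The result is in the same
    unit as `distance` (centimeters, if you work in default Unreal units).
    """
    if focal_length_mm <= 0 or distance <= 0:
        raise ValueError("focal length and distance must be positive")
    scale = distance / focal_length_mm
    return sensor_width_mm * scale, sensor_height_mm * scale


# Placeholder values: 36 x 24 mm sensor, 50 mm lens, plane 1000 units away.
width, height = image_plane_size(36.0, 24.0, 50.0, 1000.0)
print(f"plane size: {width:.1f} x {height:.1f}")
```

With these placeholder numbers the plane comes out at 720 x 480 units. If the plane sits further from the camera it needs to be scaled up by the same ratio, which is why parenting it to the camera keeps things simple.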

One of the most important things for matching the camera track was getting the exact camera settings inside Unreal. After researching the Blackmagic URSA sensor type and the lens settings online, I came up with this data to use:

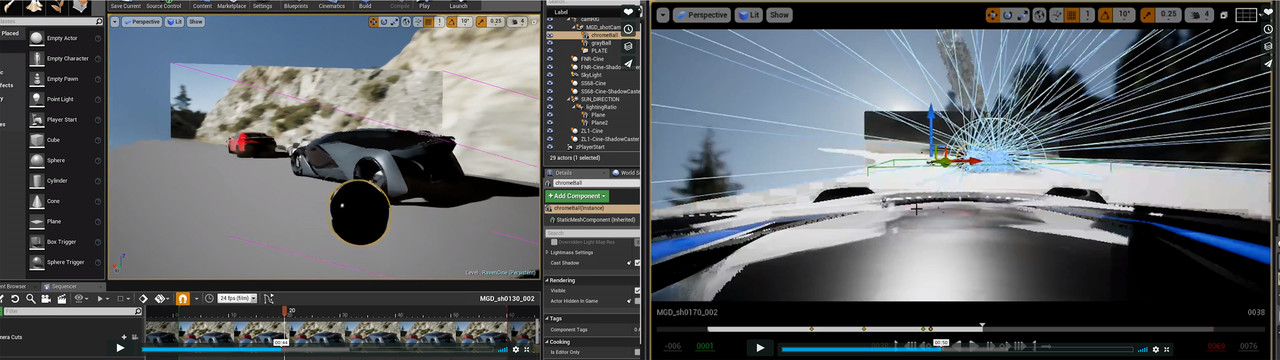
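A quick way to sanity check settings like these is to work out the field of view they produce and compare it against the tracked footage. Here's a minimal sketch using the standard pinhole formula, FOV = 2 * atan(sensor size / (2 * focal length)). The numbers below are placeholders for a generic 36 x 24 mm sensor with a 50 mm lens, not the values from my table, so swap in your own sensor and lens data.

```python
import math


def field_of_view_deg(sensor_size_mm, focal_length_mm):
    """Angle of view in degrees along one sensor dimension (pinhole model)."""
    if sensor_size_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor size and focal length must be positive")
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


# Placeholder values, NOT the actual camera data from the table above.
sensor_width_mm = 36.0
sensor_height_mm = 24.0
focal_length_mm = 50.0

hfov = field_of_view_deg(sensor_width_mm, focal_length_mm)
vfov = field_of_view_deg(sensor_height_mm, focal_length_mm)
print(f"horizontal FOV: {hfov:.1f} deg, vertical FOV: {vfov:.1f} deg")
```

For the placeholder values this gives roughly 39.6 degrees horizontally and 27.0 degrees vertically. If the sensor size in Unreal doesn't match the real camera, the same focal length gives a different field of view, and the CG won't line up with the plate.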

Lastly, I started studying the magic behind 'The Human Race', made by The Mill in 2017. This real-time commercial has been our biggest project inspiration, especially since our team was put together specifically to experiment with the possibilities of real-time engines. One of the more noticeable parts of their scene setup is that they have a plugin to feed live action footage straight into Unreal, which looks similar to my own image plane setup.

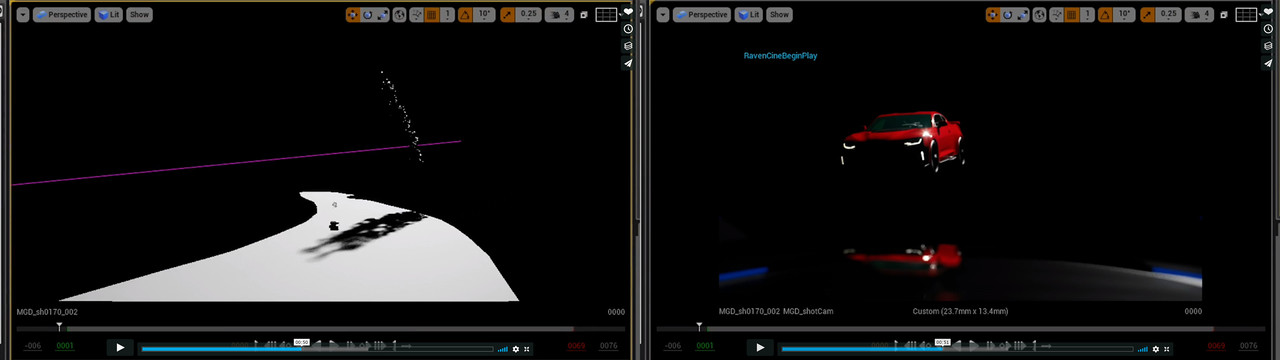

A few things I noticed in the making-of video are some of the render passes they exported for compositing. Looking at their lighting setup, it looks like they only use a directional light, which can be seen in the shadow pass. They've also exported a simple reflection pass using a glass-like material on the ground plane, while most likely compositing the real-time HDRI using their massive Blackbird rig.

Another thing to note: it seems they always add motion blur whenever the car is in front of the camera, which limits what we can see clearly in a close-up angle. In addition, they have a harder time showing a photorealistic render of the car in a dark area. This is especially visible in the tunnel shot, where you can see the limitations of real-time rendering, which they ended up covering with additional FX work to distract our eyes from the car itself.

This is by no means meant to disrespect what they did; they've shown an incredible achievement using a real-time engine. My main goal in studying their work is to set a realistic goal for our own project. While we are still being mentored by people from The Mill, we don't have access to a workstation setup similar to the one they had when making the short film. In the end, I feel like we're heading in the right direction for the commercial project.

Well then, see you in the next blog update!