Thesis Blog Part 1 | Figuring out the Photogrammetry Pipeline

Work In Progress / 18 January 2019

Realtime Car Commercial Blog, Part 9. Wrapping Up

Work In Progress / 14 November 2018

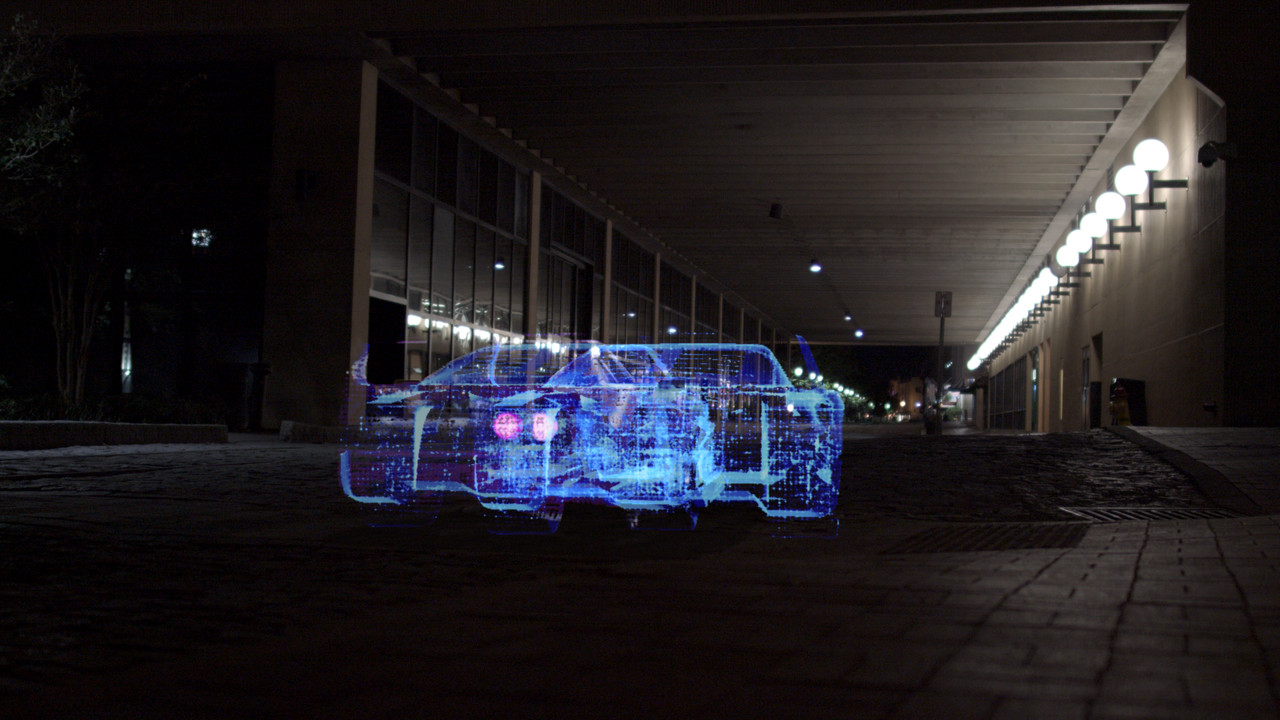

Realtime Car Commercial Blog, Part 8. Importing FX and Compositing

Work In Progress / 06 November 2018

Realtime Car Commercial Blog, Part 7. Troubleshooting Fest

Work In Progress / 31 October 2018

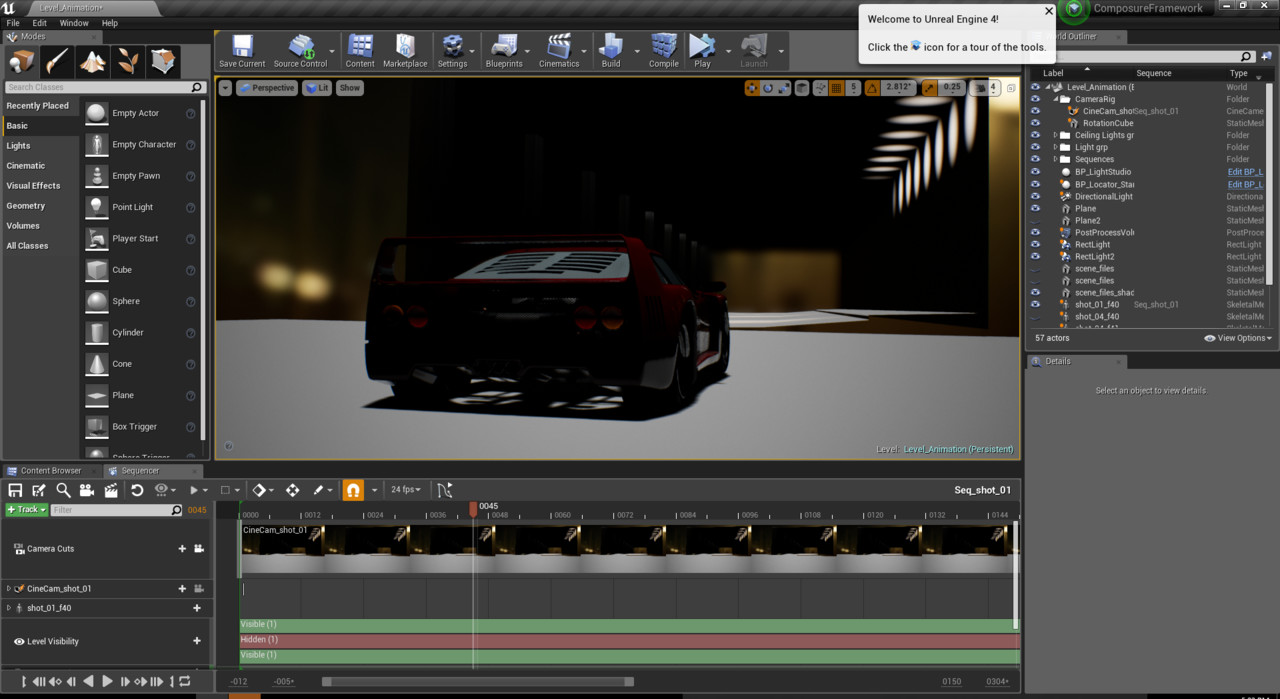

Realtime Car Commercial Blog, Part 6. Refining the Real-time Pipeline

Work In Progress / 23 October 2018

Realtime Car Commercial Blog, Part 5. Camera Track Fix

Work In Progress / 16 October 2018

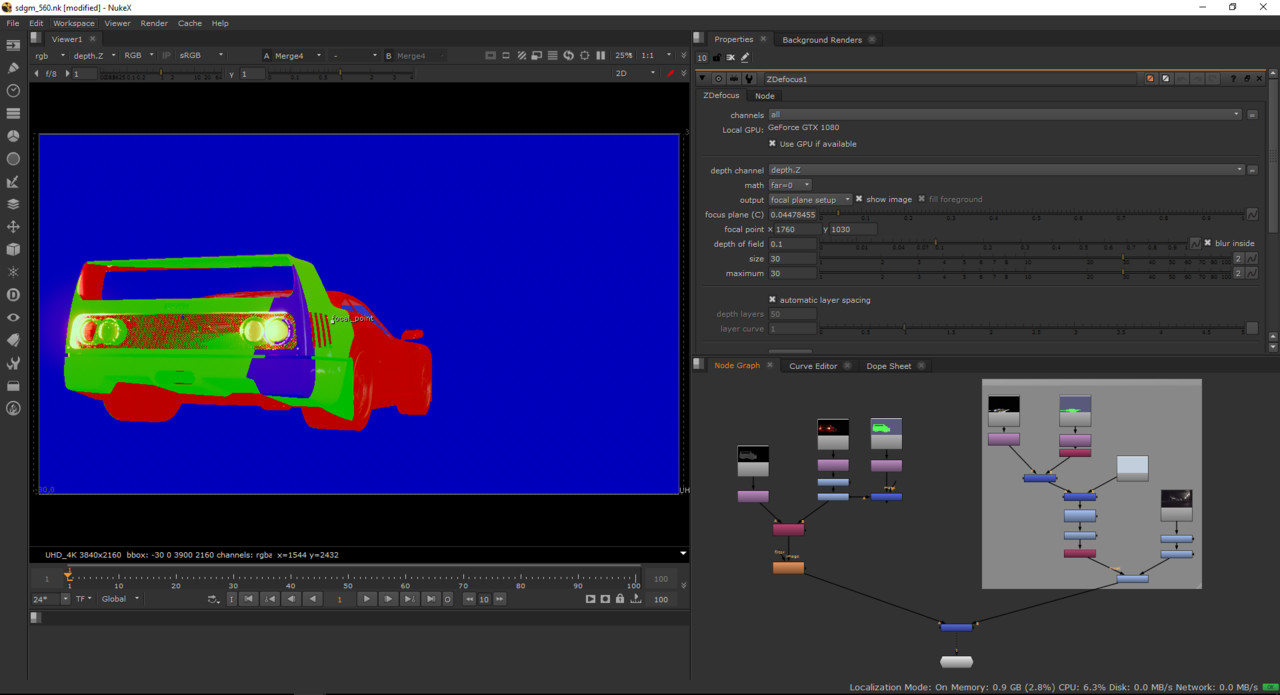

Realtime Car Commercial Blog, Part 4. Compositing Test

Work In Progress / 09 October 2018

Realtime Car Commercial Blog, Part 3. Look Development

Work In Progress / 02 October 2018

Realtime Car Commercial Blog, Part 2. R&D

Work In Progress / 25 September 2018

Realtime Car Commercial Blog, Part 1. Concept

Work In Progress / 18 September 2018